To celebrate my fresh Snowflake certification, I figured I have to write something about Snowflake database. I decided to test its integration with Azure Data Factory (ADF). First you have to create a new Linked Service within ADF. Snowflake can be found from ”Services and apps” category, not from databases.

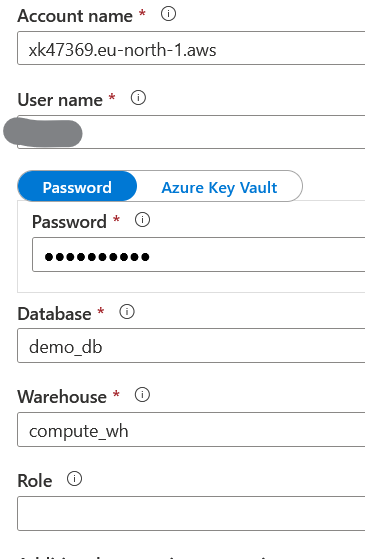

My Snowflake is in AWS, but I can connect it also from Azure. Your Snowflake could reside also within Azure and Google Cloud Platform. Account name is the same as can be seen in Snowflake web UI, but without suffix ”.snowflakecomputing.com”.

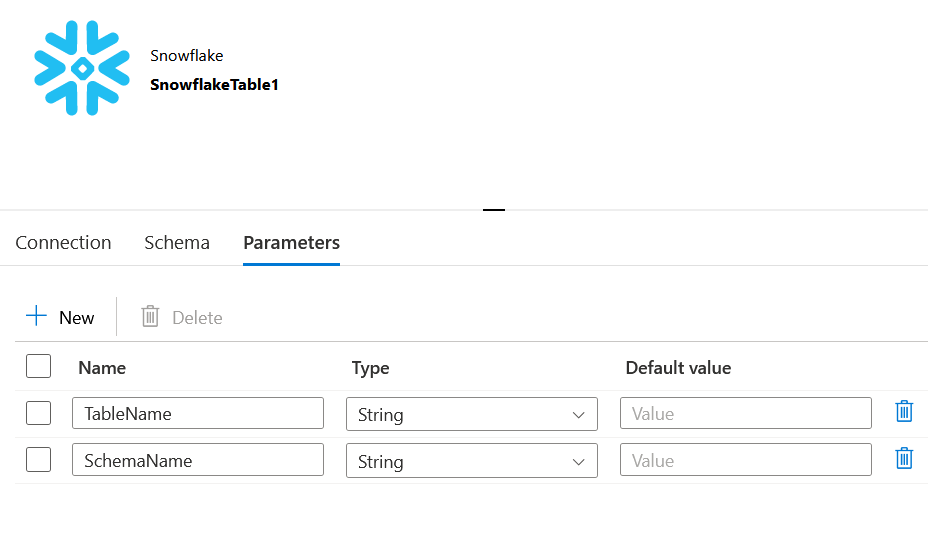

Next I created a dataset which uses above mentioned linked service. I added to two parameters, SchemaName and TableName to make it re-usable.

I refer to these parameters when defining dataset’s table-value.

In the Source-tab I can define my own query to retrieve data.

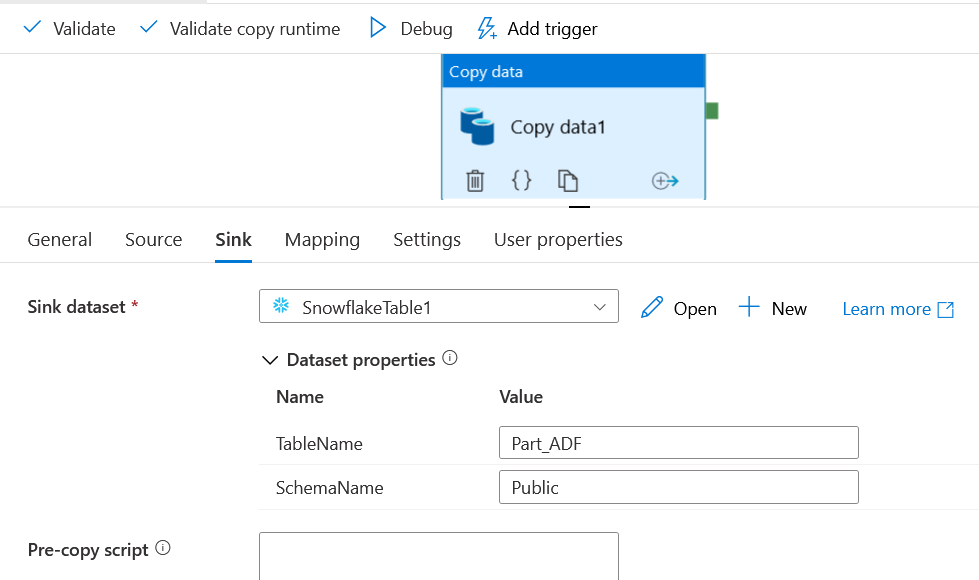

I created a metadata copy of my original Part table using Snowflake function ”Like”: Create table Part_ADF Like Part. Then I used this table as my Sink in Data Factory.

I need to enable staging for my load and I used my Azure Blob Storage as my staging area. This led to following error message: ’Snowflake copy command not support Azure Blob Storage auth type as ’not SAS’. Then I tried using Azure Data Lake Gen2 storage account, but got a message that it is not supported with Snowflake.

Next I tried creating a new blob storage account, activated Shared Access Signature and created a new Linked Service into ADF. From portal retrieved (Blob Service) SAS URL already included required token, so token field is left empty.

Finally I got it up and running! And it failed after 11 minutes… ”Error accessing remote file ’https://terosasstorage.blob.core.windows.net…” I tried to use root of the storage account, but it didn’t work. Next I created a container and used that one as a temp folder. That worked, but produced next error: ”Snowflake only support the account hosted in azure platform while as sink connector, please make sure your account is hosted in azure, current region and platform is ’AWS_EU_NORTH_1′”. I appreciate clear error message. I can’t use ADF to load data to Snowflake unless it is within Azure cloud. That rules out AWS and GCP. My Snowflake is in AWS Sweden region, my Azure resources are in North Europe region (Ireland).

Nevertheless I’m able to read from AWS Snowflake, which leads to my next test. I admire Snowflakes ability to share data securely without copying the actual data by using share functionality and creation of reader account. I wanted to test is it possible to connect to this shared data using reader account access. For those who don’t know, creation of reader account in Snowflake, creates also new url, which user should use for accessing shared data.

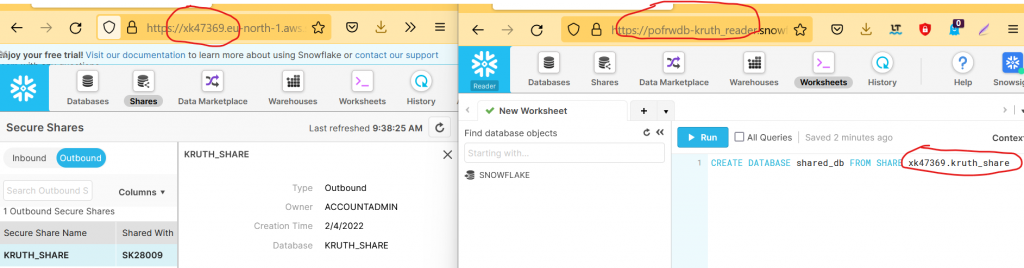

First I created a new database, a share and a reader account in Snowflake and connected the reader account to my share.

Next I created a secure view pointing to my Part-table. ”Create secure view kruth_share.public.part as

Select p_partkey, p_name, p_mfgr, p_brand, p_type, p_size, p_container, p_retailprice, p_comment From demo_db.public.Part”.

Point in creating a secure view is that a user can’t see the code behind it. Still I needed to grant rights to that view: ”grant select on view kruth_share.public.part to share kruth_share”. But that didn’t work. I got an error message: ”A view or function being shared cannot reference objects from other databases.” Oh, that’s a bummer. But luckily I found a solution for that problem. You need to grant reference usage privileges to required database for your share: ”GRANT REFERENCE_USAGE ON DATABASE DEMO_DB TO SHARE KRUTH_SHARE”. After that I was able grant select permissions to my share.

Following task was to log in to my reader account and create a database from shared data. On the left side is my original account UI and on the right side is my reader account UI. Notice different URLs and how I used original account name and share name to create a database on my reader account.

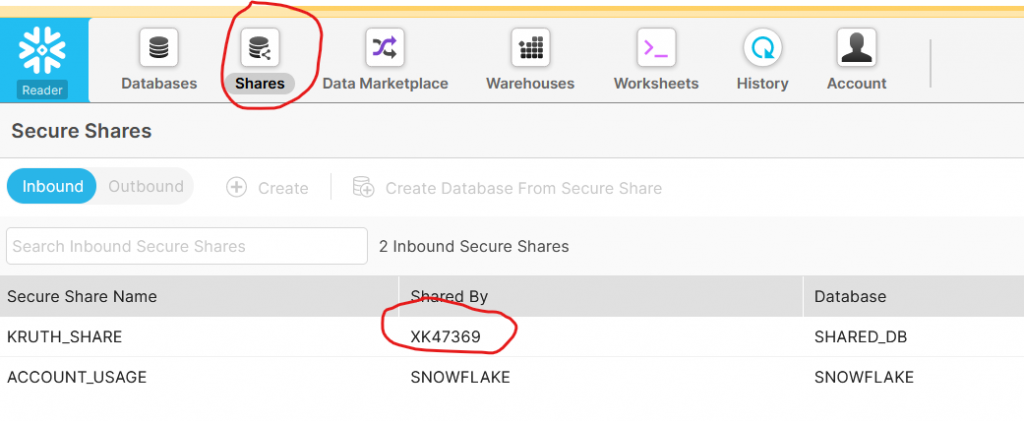

Share information can also be found from reader account by clicking Shares button.

Also virtual warehouse for compute needs to be created for reader account within reader account, but note, the bill goes to share provider.

Finally I created a new linked service in Azure Data Factory which pointed to my reader account Snowflake. For role I assigned highest level role accountadmin, because default is sysadmin and I hadn’t assigned privileges to my sysadmin role to access my new database. Of course, other way would have been just to assign required permission to sysadmin role. I created a copy activity to copy from Snowflake reader account to my Azure Data Lake and executed it. Voilà, now I had my data from Snowflake reader account in Azure Data Lake.

This Snowflake data sharing seems to be very valid method to share data outside organization without a need to copy and send it separately. Next I’m planning to find how to connect ADF to AWS S3, because it seemed to be a little bit complicated.

Hey, I think your blog might be having browser compatibility issues.

When I look at your blog in Firefox, it looks fine but when opening in Internet Explorer, it has some overlapping.

I just wanted to give you a quick heads up! Other then that, very

good blog!