My Azure Machine Learning retraining and scoring solution, built earlier with Azure Logic App and Power BI (more info here: https://www.kruth.fi/uncategorized/azure-machine-learning-retrain-running-r-scripts-with-power-bi-and-some-dax/), stopped working last January. Until now I didn’t have enough motivation to dig into what was wrong. The reason turned out to be a component that was removed from Azure Logic App, namely the Azure ML component. It simply doesn’t exist any more.

I started to investigate how I could replace that solution and found this article: https://azure.microsoft.com/en-us/blog/getting-started-with-azure-data-factory-and-azure-machine-learning-4/. Its instructions were a little outdated and were missing some links to Azure ML. I try to fill those gaps with this article.

This process can be separated into three parts:

- Machine Learning model retraining

- Deploying retrained model

- Using the updated model for scoring

Model retraining

The first step is to retrain the model with new data. For that you need the Data Factory Machine Learning Batch Execution activity.

Before you can use it, you need to create a Linked Service to your Azure ML. The correct web service is the one named after your model without any suffix. A prerequisite, of course, is that you have created those web services beforehand. You can find more about that in the blog post I linked at the beginning of this article.

You will find the correct endpoint as follows: click the web service name and copy the API key from the page that opens.

Then click ”Batch Execution” and copy the Request URI value:

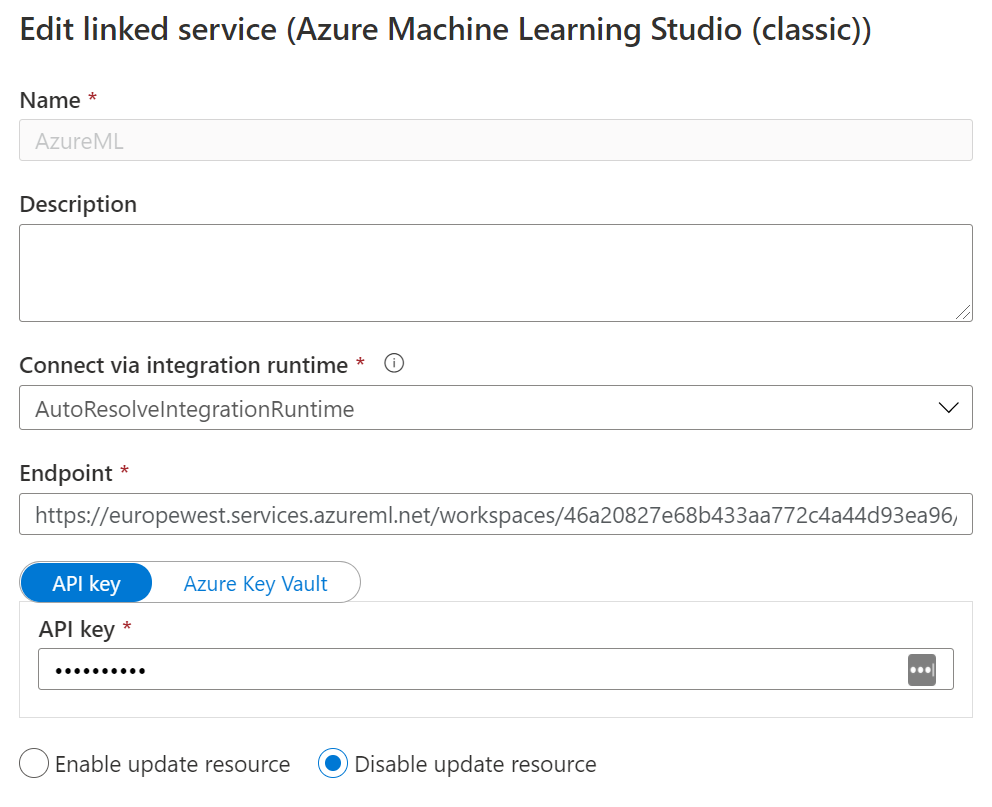

Enter those values in the Data Factory Linked Service.
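The same Linked Service can also be written as a JSON definition. The snippet below is a minimal sketch that builds such a definition in Python. It assumes the `AzureML` linked service type with the `mlEndpoint` and `apiKey` properties from the Data Factory schema. The service name `AzureMLRetrainService` and the placeholder values are hypothetical and must be replaced with your own.

```python
import json


def build_azure_ml_linked_service(name, request_uri, api_key):
    """Return a Data Factory linked service definition for an Azure ML web service."""
    return {
        "name": name,
        "properties": {
            "type": "AzureML",
            "typeProperties": {
                # Request URI copied from the "Batch Execution" page
                "mlEndpoint": request_uri,
                # API key copied from the web service page
                "apiKey": {"type": "SecureString", "value": api_key},
            },
        },
    }


# Hypothetical name and placeholder values, for illustration only.
retrain_service = build_azure_ml_linked_service(
    name="AzureMLRetrainService",
    request_uri="<Request URI of the training web service>",
    api_key="<API key of the training web service>",
)

print(json.dumps(retrain_service, indent=2))
```

Compare the printed JSON with the code view of the Linked Service in Data Factory before relying on it.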

In the Settings tab of the Batch Execution activity you define the input and output details. As input I use a CSV file stored in my blob storage. For that connection you of course need a Linked Service to your blob storage.

I had real difficulties understanding which values I should use as keys for my input and output, because that is missing from the documentation. Finally I found the values input1 and output1 in the documentation of my Azure ML web service.

So my Input part looks like this:

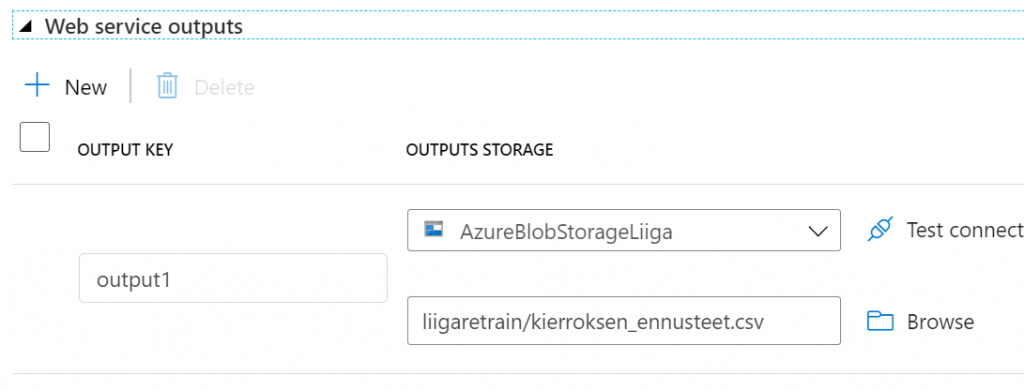

The Output is defined in the same way, using the key output1. As output the web service produces an ilearner file, which is the newly retrained Machine Learning model. I save the file to the same blob storage location. Retraining in itself is not enough, however: you also need to update your current model by deploying the generated model.
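The input and output settings of the retraining activity can be sketched as a JSON definition, built here in Python. This is a rough sketch under assumptions: the activity type `AzureMLBatchExecution` and the `webServiceInputs` / `webServiceOutputs` properties follow the Data Factory activity schema, and the keys `input1` and `output1` are the ones mentioned above. The activity name, linked service names and file paths are hypothetical.

```python
import json


def blob_file(storage_linked_service, file_path):
    """Reference to a file in blob storage, used for web service inputs and outputs."""
    return {
        "linkedServiceName": {
            "referenceName": storage_linked_service,
            "type": "LinkedServiceReference",
        },
        "filePath": file_path,
    }


def batch_execution_activity(name, ml_linked_service, inputs, outputs):
    """Return a Machine Learning Batch Execution activity definition."""
    return {
        "name": name,
        "type": "AzureMLBatchExecution",
        "linkedServiceName": {
            "referenceName": ml_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "webServiceInputs": inputs,
            "webServiceOutputs": outputs,
        },
    }


# All names and paths below are hypothetical placeholders.
retrain_activity = batch_execution_activity(
    name="RetrainModel",
    ml_linked_service="AzureMLRetrainService",
    inputs={"input1": blob_file("BlobStorageService", "mlfiles/training-data.csv")},
    outputs={"output1": blob_file("BlobStorageService", "mlfiles/retrained-model.ilearner")},
)

print(json.dumps(retrain_activity, indent=2))
```

The keys of the two dictionaries must match the input and output names listed in your own web service documentation. `input1` and `output1` are only what my web service uses.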

Model deployment / updating model

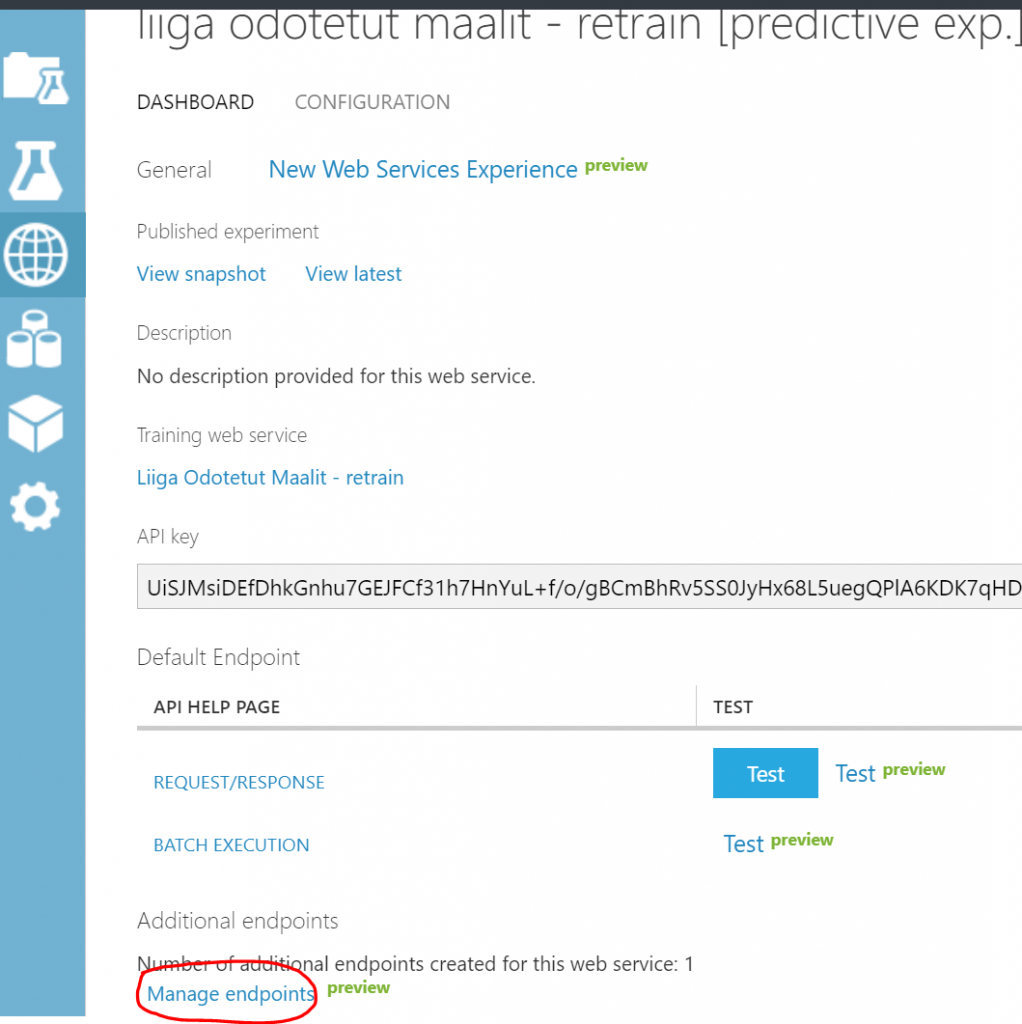

For this step you use the Data Factory Machine Learning Update Resource activity. You first need to create a new Linked Service, because this process uses a different Azure ML web service: the previously created Predictive experiment web service.

On the next page, click ”Manage endpoints”.

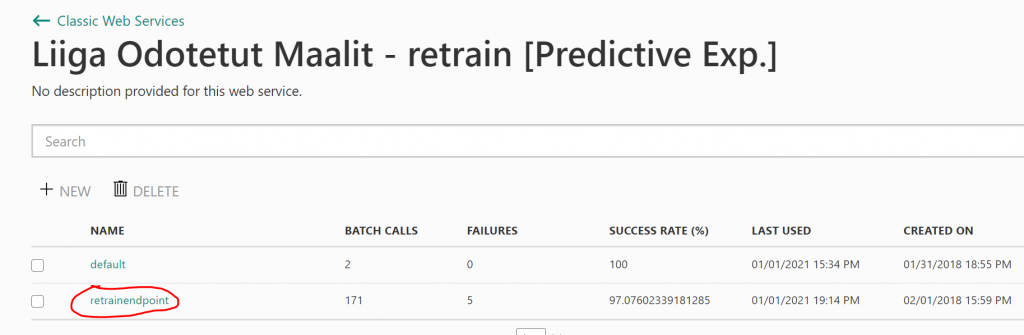

I had already created an additional endpoint earlier and named it retrainendpoint.

Click that one and, on the next page, click ”Use endpoint”. From that page copy the values of the ”Primary Key”, ”Batch Requests” and ”Patch” fields.

Use these values in your Data Factory Linked Service as follows: Endpoint = Batch Requests, API key = Primary Key, Update resource endpoint = Patch.
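The mapping of the three fields can be sketched as a JSON definition, built here in Python. It assumes the `AzureML` linked service type with the properties `mlEndpoint`, `apiKey` and `updateResourceEndpoint` from the Data Factory schema. The service name and the placeholder values are hypothetical.

```python
import json


def build_updatable_ml_linked_service(name, batch_requests_url, primary_key, patch_url):
    """Return a linked service definition for an endpoint that can be scored and updated."""
    return {
        "name": name,
        "properties": {
            "type": "AzureML",
            "typeProperties": {
                # Endpoint = "Batch Requests" value
                "mlEndpoint": batch_requests_url,
                # API key = "Primary Key" value
                "apiKey": {"type": "SecureString", "value": primary_key},
                # Update resource endpoint = "Patch" value
                "updateResourceEndpoint": patch_url,
            },
        },
    }


# Hypothetical name and placeholder values, for illustration only.
scoring_service = build_updatable_ml_linked_service(
    name="AzureMLScoringService",
    batch_requests_url="<Batch Requests value of retrainendpoint>",
    primary_key="<Primary Key value of retrainendpoint>",
    patch_url="<Patch value of retrainendpoint>",
)

print(json.dumps(scoring_service, indent=2))
```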

In the Settings tab of your Machine Learning Update Resource activity you fill in the details of the ilearner file you just created. I had trouble finding the correct name for the ”Trained model” field, but luckily the error message was clear enough to give me the correct answer. In my case it was the name of my model with the ”[trained model]” suffix.
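The settings of the Update Resource activity can be sketched in the same way. The activity type `AzureMLUpdateResource` and the properties `trainedModelName`, `trainedModelLinkedServiceName` and `trainedModelFilePath` are assumed from the Data Factory activity schema. The ”[trained model]” suffix is what worked in my case and may differ in yours. The model name `MyModel` and all other names and paths are hypothetical.

```python
import json


def trained_model_name(model_name):
    """Model name with the '[trained model]' suffix (what worked in my case)."""
    return f"{model_name} [trained model]"


def update_resource_activity(name, ml_linked_service, model_name,
                             storage_linked_service, ilearner_path):
    """Return a Machine Learning Update Resource activity definition."""
    return {
        "name": name,
        "type": "AzureMLUpdateResource",
        "linkedServiceName": {
            "referenceName": ml_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "trainedModelName": trained_model_name(model_name),
            "trainedModelLinkedServiceName": {
                "referenceName": storage_linked_service,
                "type": "LinkedServiceReference",
            },
            # Path of the ilearner file written by the retraining step
            "trainedModelFilePath": ilearner_path,
        },
    }


# All names and paths below are hypothetical placeholders.
deploy_activity = update_resource_activity(
    name="DeployRetrainedModel",
    ml_linked_service="AzureMLScoringService",
    model_name="MyModel",
    storage_linked_service="BlobStorageService",
    ilearner_path="mlfiles/retrained-model.ilearner",
)

print(json.dumps(deploy_activity, indent=2))
```

If the trained model name is wrong, the error message of the failed activity run is the quickest way to find the right one.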

Using updated model for scoring

In this step you again use the Data Factory Machine Learning Batch Execution activity. You can use the same Linked Service you created in the previous step. I created a new one because I was testing many things, but in the end it seems I’m using the same endpoint as in the previous step. If that does not work for you, try creating a new one.

In the Settings tab you define the input and output values. As input I give a CSV file containing the games for the next round, in the format my model expects. I store the output file in my blob storage.
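The three steps only make sense in order: retrain, deploy, score. One possible way to enforce that order in a single pipeline is the `dependsOn` property of Data Factory activities. The sketch below shows the idea in Python. It is an assumption about how the pipeline could be arranged, not a description of my exact setup. The activity and pipeline names are hypothetical, and the `typeProperties` of each activity are left out for brevity.

```python
import json


def chain_sequentially(activities):
    """Make each activity depend on the success of the one before it."""
    chained = []
    previous_name = None
    for activity in activities:
        step = dict(activity)  # copy so the input list is not modified
        if previous_name is not None:
            step["dependsOn"] = [
                {"activity": previous_name, "dependencyConditions": ["Succeeded"]}
            ]
        chained.append(step)
        previous_name = step["name"]
    return chained


# Hypothetical activity names; typeProperties omitted for brevity.
steps = chain_sequentially([
    {"name": "RetrainModel", "type": "AzureMLBatchExecution"},
    {"name": "DeployRetrainedModel", "type": "AzureMLUpdateResource"},
    {"name": "ScoreNextRound", "type": "AzureMLBatchExecution"},
])

pipeline = {"name": "RetrainDeployScore", "properties": {"activities": steps}}

print(json.dumps(pipeline, indent=2))
```

With this arrangement scoring starts only after the updated model has been deployed successfully.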

In the output file, the model predicts home team Karpat to score 3.14 goals and away team Jukurit to score 1.43 goals.

I described the next steps for generating betting odds in my previous post. For this game they look like this:

I hope this post helps somebody else, because I would have appreciated instructions like these myself.