Based on my last blog I got an idea to investigate could I automate extraction of data from similar web sites with Power BI. I doing this blog in English, because it’s a little bit more technical.

As a starter I decided to automate extraction of Liiga results done in previous blog when I realized it’s possible. Earlier I copied data from web site to Excel, from where I downloaded data into Power BI. Then I realized I could do direct extraction from web site with Power BI.

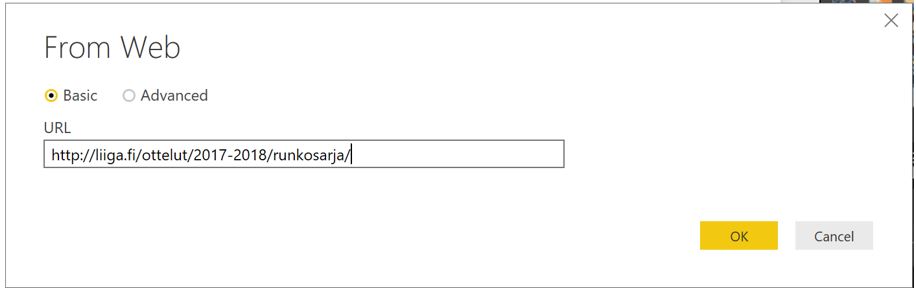

From Power BI you can find Web Source.

Give your URL:

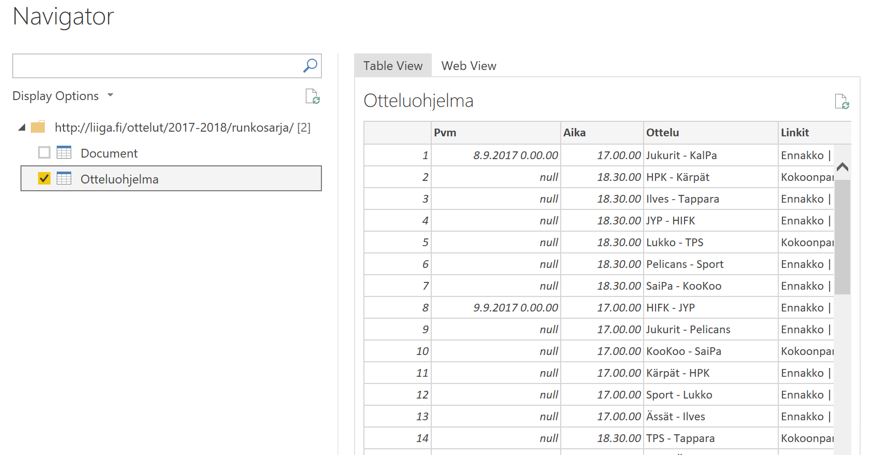

After that you select correct table if there are many. Table like data are shown as own tables with preview possibility, which is quite handy:

Select Edit and do your changes as previously.

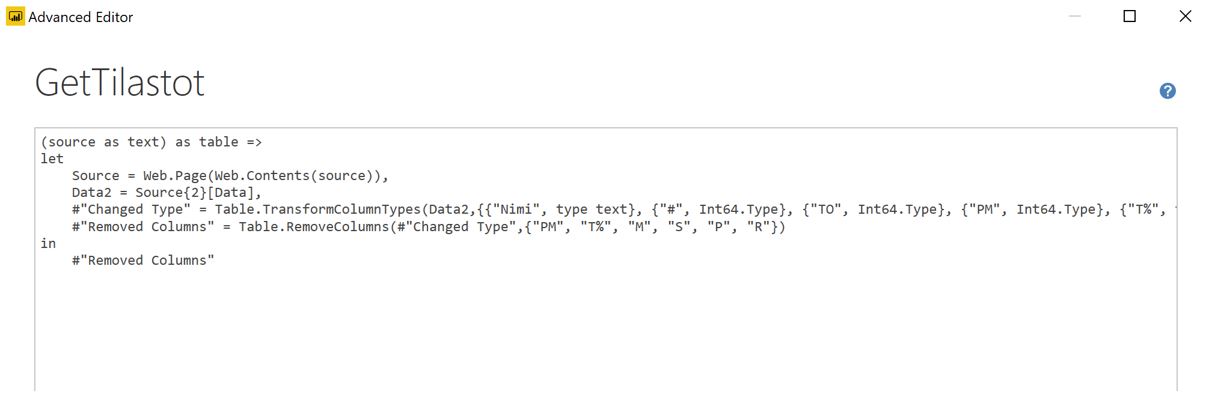

I had to visit also Advanced Editor to correct a little bit M-code. Or actually I added one filter to remove not yet played games. But I learned later I could have done that also without editing M-code.

After that the actual beef – how could I get goalie save stats automatically from different sub pages. Address format of those pages were: http://liiga.fi/ottelut/2017-2018/runkosarja/1/tilastot/ where that game number (1 in this case) changes. Under that url, you can find many tables and two of those contain goalie saves (home and away team).

First I thought to use SQL Server Integration Services, because of its looping capabilities. But to read web pages there is no off the shelf component. Zappy Sys has one, but it costs $600. I could have used 30 days trial version, but decided to look for alternative.

It seems I could have used Python or R, but decided to look for Power BI option. For a start it looked difficult, but then I found a way. Don’t remember anymore, which web site had good instructions.

First I extracted goalie save stats from one of those sub pages as a web table as previously. Then I constructed a function from that query by changing original ”Source =” row to use a parameter. Original Source-row:

Source = Web.Page(Web.Contents(”http://liiga.fi/ottelut/2017-2018/runkosarja/94/tilastot/”))

Below edited code. That beginning before let-part changes source as a function.

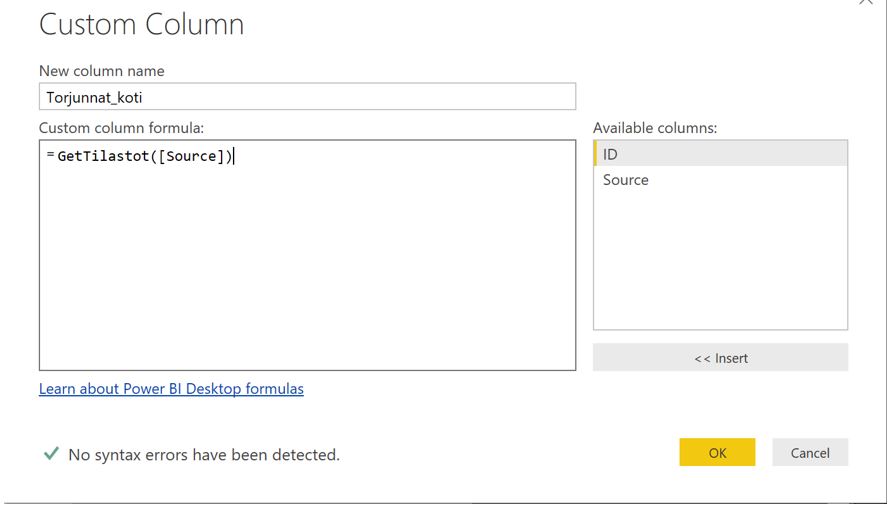

After that I created a table in Excel, which included those used url-addresses.

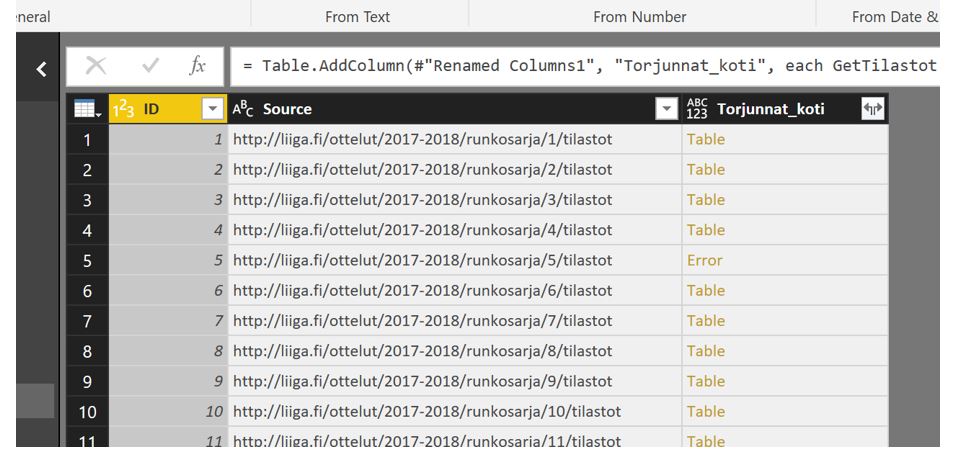

I downloaded this into Power BI and added a custom column, which calls just created function. Url-address is given as a parameter, which can be found from column named ”Source”.

End result – I managed to download all the required tables: 🙂

With few pages I had some challenges. There was no data (e.g. row 5). I decided to do error handling another time and just removed those rows (”Remove error rows”). I opened those tables by clicking mark on top right corner of that column, which created two rows per game. One row for each goalie in the roster.

Then the same to away-team’s saves. As an end result I had four rows per one game.

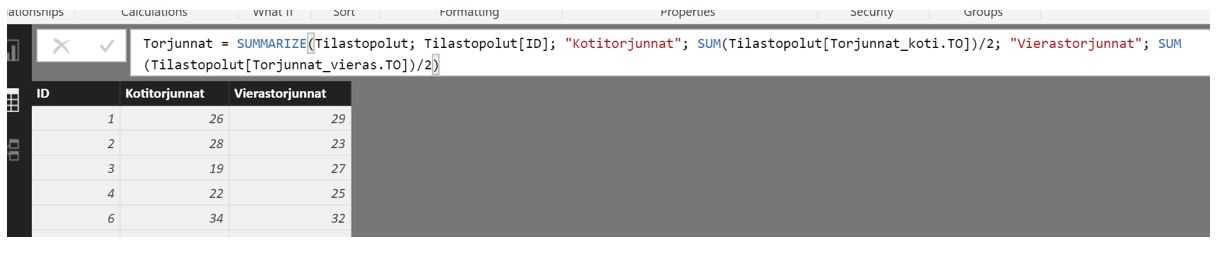

Finally I created a new table with DAX from these rows where I summarized saves together. Sums I divided by two to get correct end results. Something to do with double rows.

Next I will do the same to season 2016-17 games and add season to ID to be able to combine results. Actually I have to add goalie saves goals also to get correct shots on goal number. After that I can start investigating can I use those to forecast game results using machine learning.😊.

P.S. Beginning in my betting has not been successful. First R model seems not to be money making machine. Let’s see if I can create something new.